Build your own AI

Using some cups, pen and paper you can build an AI that plays the game Nim. Train it by playing and it’ll improve until it becomes unbeatable. No computers required!

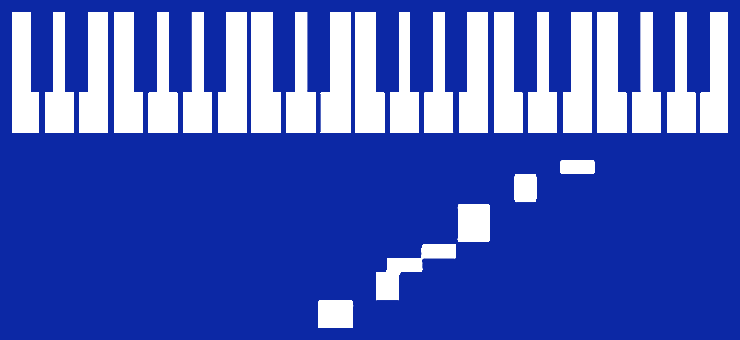

Nim is an ancient game with many variations. In this case we’ll use one of its simplest forms: Lay out ten pencils (or coins, buttons, etc.) in a row on the table. Two players take turns removing either one, two or three pencils. The player that takes the last pencil loses.

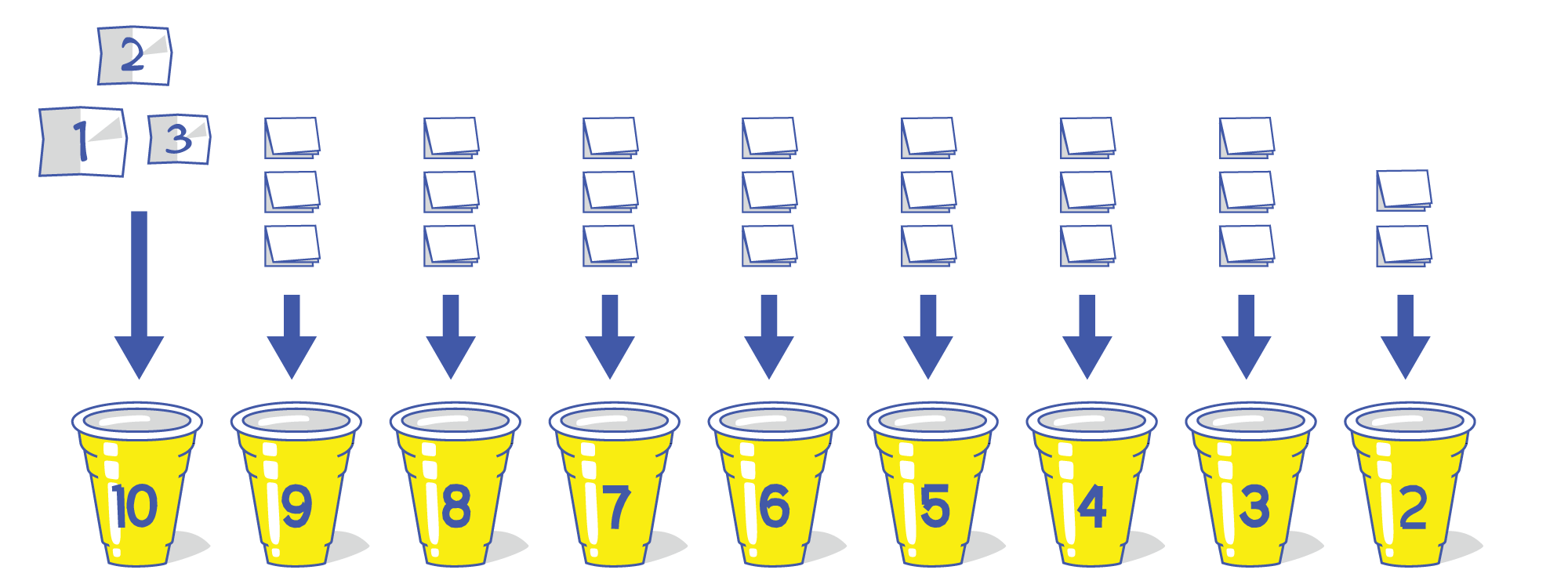

To build an artificial intelligence that can play Nim, get nine cups, label them with the numbers 10 through 2 and place them in a row in front of you. Cut three small square pieces of paper of approximately the same size and write the numbers 1, 2 and 3 in them. Fold each piece of paper in four and place them on cup number 10. Repeat this procedure until each cup has three pieces of paper numbered 1, 2, 3 inside. The last cup, number 2, only needs two pieces of paper with the numbers 1 and 2.

Now your AI is able to play Nim against you. Decide who goes first. When it’s the AI’s turn to play, count how many pencils are left and grab the cup with that number. Without looking, pick a piece of paper from inside, the number written on it is the number of pencils that the AI takes. Put the piece of paper back in the cup.

With this procedure, and your help handling the cups and pencils, the AI is able to make a decision on its turn. Its moves are always valid, but completely random. It should be easy to beat and sometimes the AI will even make extremely bad decisions (for instance taking three pencils when there are all that’s left). Now we need to train it and make it unbeatable.

Training your AI

Take turns with the AI being the first to play. Whenever you pick a piece of paper for the AI leave it next to the cup it came from. When the game finishes do the following:

- If the AI won, re-fold the papers and put them back inside each cup.

- If the AI lost, discard the piece of paper that made it take its last action instead of putting it back in the cup. The AI learned this was a bad choice, so it won’t repeat it. Fold the rest of the papers and put them inside their cups.

- However, if a cup only has one piece of paper in it, put it back and discard the piece of paper from the previous action instead. Cups should always have at least one piece of paper.

After playing enough times, each cup will end with only one piece of paper: the perfect move in that situation. The AI will be fully trained and will win whenever it’s the first to play, and lose only when you go first and play perfectly. Make it challenge other people who didn’t get as much practice as you did during this process, surprise your friends and family.

Although you didn’t use any electronics, this is the way that computer artificial intelligence systems are often trained: slowly learning, through a cycle of repetition and feedback, to avoid actions that lead to bad outcomes. This process doesn’t apply just for games, but AI systems for all sorts of applications.

Before its training, an AI has all the parts required for its decision making process to work (in this case the cups and pieces of paper) but no “knowledge” to guide it. Building an AI and training it are two separate and different processes. AI systems for complex tasks very often do not reach the “fully trained” state yours achieved in the end, but at some point they are deemed good enough to do real work outside of the lab (where, in some cases, they’ll continue to learn and improve).

Further experiments

This same technique can be used to build AIs that play other games. Our version of Nim can be in nine different “states”, hence nine cups, with three possible actions in each, one per piece of paper. Most games have a larger number of possible states and actions, so they require a more complex setup.

In 1961, researcher Donald Michie created MENACE, an AI built with 304 matchboxes and many colored beads that could play Tic Tac Toe. Its scheme is basically the same we used, except MENACE not only removes beads that caused a defeat but also adds beads on a victory to make its winning strategy more likely in the future.

In 2017 MENACE was rebuilt for the Manchester Science Festival where it quickly learned to win or draw every game by training against festival attendees:

While playing Nim you might have deduced a strategy to win through logic reasoning, without needing to fail in every possible way, or maybe noticed the pattern in the final numbers inside the cups of the fully trained AI. Humans can use deduction and abstraction to find patterns and solve problems while computers often rely on their ability to deal with short repetitive tasks very quickly.

Some games, like Chess and Go, have such complexity that no human has yet found a strategy to win every time. Computers also have trouble analyzing all their possible states. According to rough estimations we’d need almost 1050 cups (1050 is a 1 followed by 50 zeros, approximately the number of atoms on Earth) to teach an AI to play Chess in this fashion. These games and other problems of high complexity require AI approaches that find good solutions by discovering patterns and rules without examining all the possible game states, neural networks for example.

Some things to think about

- Computers can play some games perfectly, winning every time. The AIs in video games are sometimes programmed to play imperfectly so human players have a chance at winning, or to appear more life-like. In what other activities and ways does AI need to be “less than perfect”? Why do we, humans, need it?

- Our AI could learn to play Nim perfectly without having any understanding of its rules; It’s common for AIs to be able to learn to perform a task without any knowledge about its meaning. Should this be called “intelligence”? Is the idea that AIs “learn” a good metaphor? Are humans and other intelligent animals always aware of their decision making processes? Why is it good for humans to understand the things they learn to do?

In the I AM A.I. Exhibition

In the Turing Game Table exhibit we use a more complex version of Nim and a visitor’s help in playing the role of AI to continue exploring the ability of computers to perform tasks they don’t understand and how we relate to them.

Learn how to visit.

Text is available under the Creative Commons Attribution License.